Starting from Hadoop 2.4, HDFS supports ACLs (Access Control Lists). They work much like extended ACLs in a Unix environment. You can use them to grant permissions on HDFS files and directories to specific users and groups, beyond the basic POSIX owner/group/other permissions.

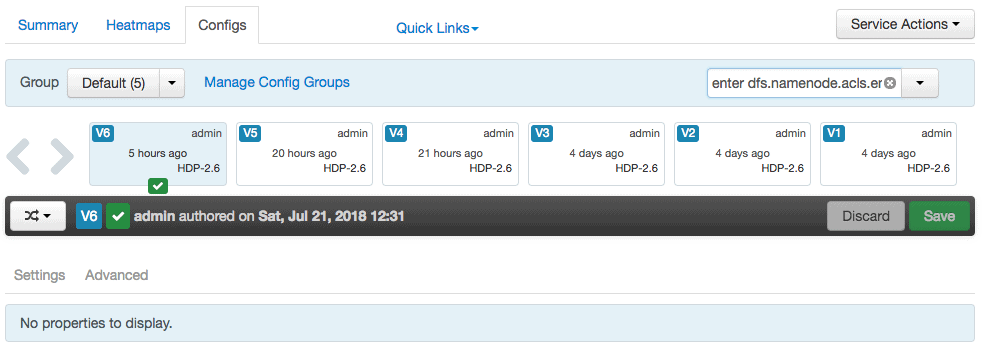

To check whether ACLs are already enabled, go to Services > HDFS > Configs in Ambari and search for the property “dfs.namenode.acls.enabled” in the search box.

Enabling HDFS ACLs

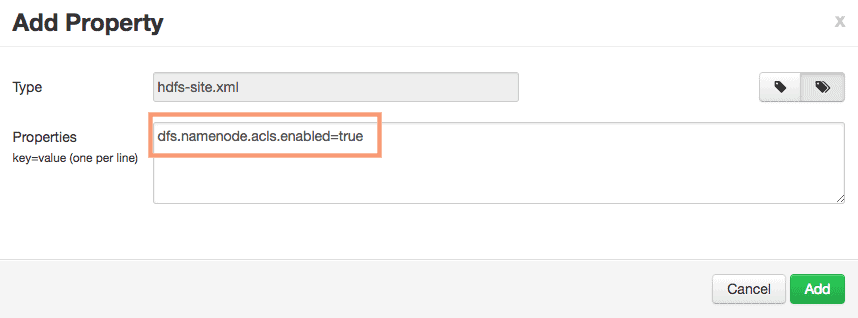

To use HDFS extended ACLs, you must first enable them on the NameNode by setting the configuration property dfs.namenode.acls.enabled to true in hdfs-site.xml. There are two ways to do this. We will use Ambari, as it is easier and less error-prone.
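Without Ambari, you can add the property to hdfs-site.xml yourself and then restart the NameNode. The bash function below is a minimal sketch of that manual edit. `add_acl_property` is a hypothetical helper, and it assumes the closing `</configuration>` tag sits on its own line. On an Ambari-managed cluster, make the change through Ambari instead. Ambari may overwrite hand-made edits to the files under /etc/hadoop/conf.

```shell
# add_acl_property (hypothetical helper): add dfs.namenode.acls.enabled=true to an
# hdfs-site.xml that is NOT managed by Ambari. Assumes </configuration> is on its own line.
add_acl_property() {
  local conf_file="$1" tmp_file status
  # Do nothing if the property is already defined, whatever its current value.
  if grep -q '<name>dfs.namenode.acls.enabled</name>' "$conf_file"; then
    echo "dfs.namenode.acls.enabled already present in $conf_file; check its value" >&2
    return 0
  fi
  tmp_file=$(mktemp) || return 1
  # Emit the new <property> block just before the closing </configuration> tag.
  awk '
    /<\/configuration>/ {
      print "  <property>"
      print "    <name>dfs.namenode.acls.enabled</name>"
      print "    <value>true</value>"
      print "  </property>"
    }
    { print }
  ' "$conf_file" > "$tmp_file" && cat "$tmp_file" > "$conf_file"
  status=$?
  rm -f "$tmp_file"
  return "$status"
}

# Usage (on the NameNode host, then restart the NameNode):
#   cp /etc/hadoop/conf/hdfs-site.xml /etc/hadoop/conf/hdfs-site.xml.bak
#   add_acl_property /etc/hadoop/conf/hdfs-site.xml
```

Keeping a backup copy, as in the usage comment, makes it easy to roll back if the NameNode fails to start.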

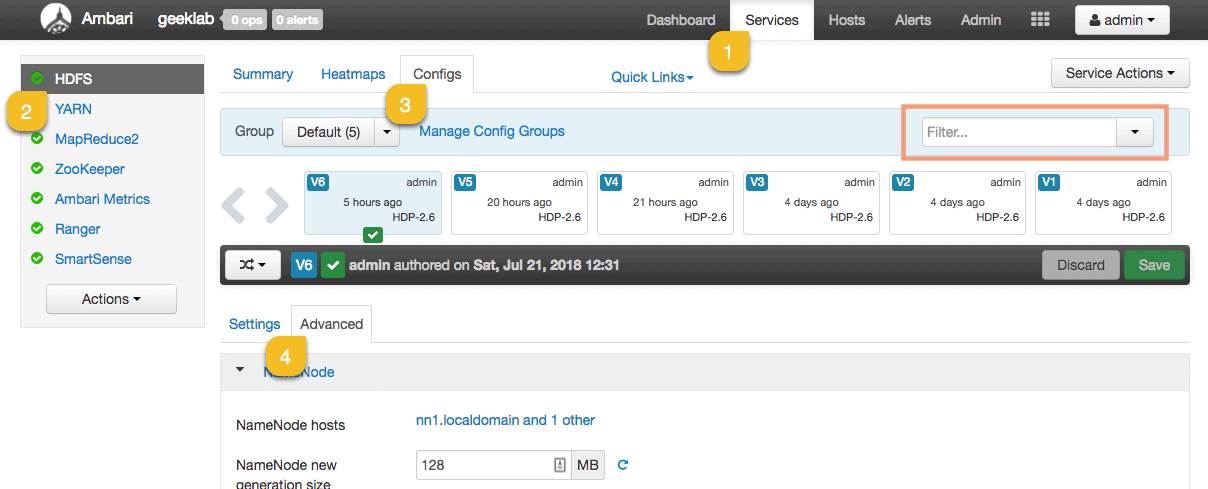

In the Ambari UI, go to Services > HDFS > Configs. Before proceeding, you can search for the property in the search box to check its current value.

To set the property, go to Advanced > Custom hdfs-site and click “Add Property”. In the pop-up window, enter “dfs.namenode.acls.enabled” as the key and “true” as the value, then click Add.

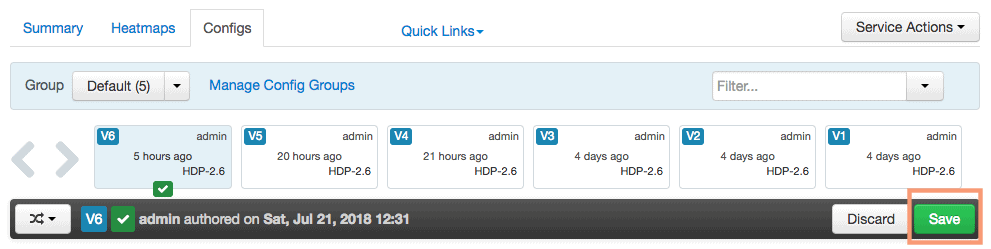

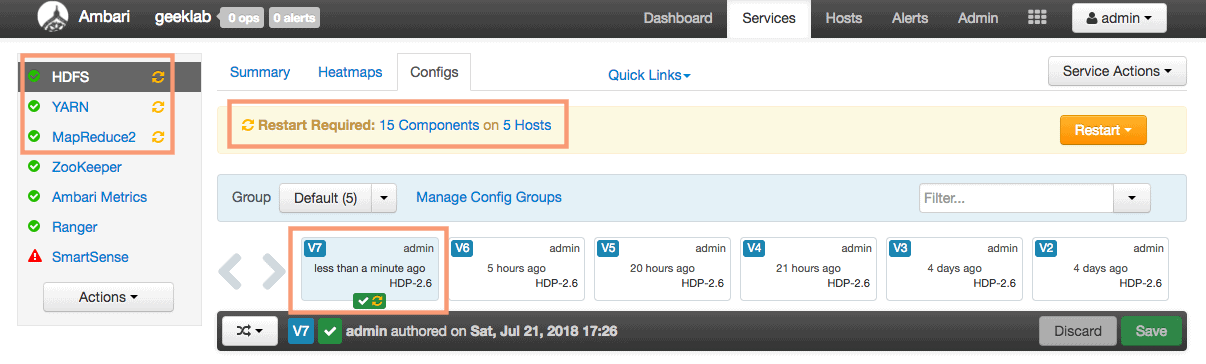

Click Save to store the change. It takes effect once the affected services are restarted.

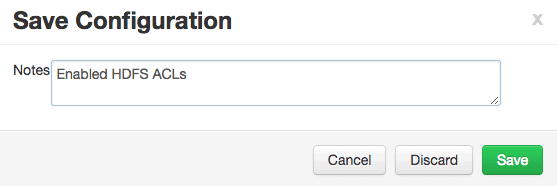

Enter a meaningful description of the change when saving the configuration.

After saving, Ambari may ask you to restart the affected services. In our case, HDFS, YARN and MapReduce2 needed a restart, so we restart all of the affected services.

Verify

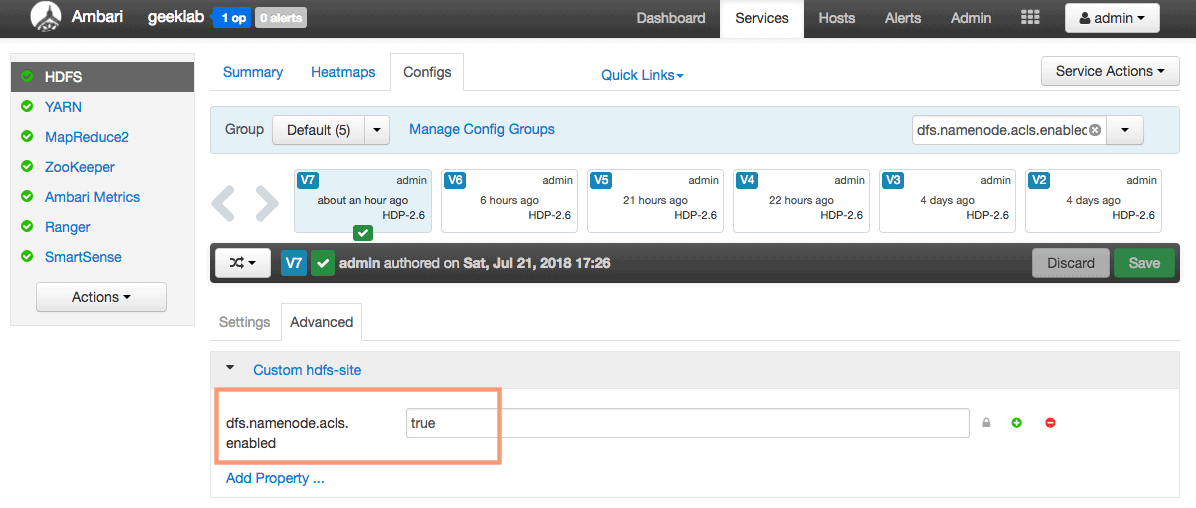

You can confirm from the Ambari UI that the property is set: go to Services > HDFS > Configs and search for the property “dfs.namenode.acls.enabled” in the search box.

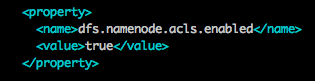

You can also open /etc/hadoop/conf/hdfs-site.xml on the NameNode host and search for the property “dfs.namenode.acls.enabled” in the file.

    # cat /etc/hadoop/conf/hdfs-site.xml
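Rather than reading the whole file, you can ask Hadoop for the value directly with `hdfs getconf -confKey dfs.namenode.acls.enabled`. It prints the value from the configuration loaded on that host. For a plain file check, the function below is a minimal sketch, and `acls_enabled` is a hypothetical name. It assumes the `<value>` element is on the same line as `<name>` or on the line right after it, which is the usual layout.

```shell
# acls_enabled (hypothetical helper): print "true" if the given hdfs-site.xml sets
# dfs.namenode.acls.enabled to true, otherwise "false". Looks for the <value> element
# on the <name> line or the line right after it.
acls_enabled() {
  if grep -A1 '<name>dfs.namenode.acls.enabled</name>' "$1" | grep -q '<value>true</value>'; then
    echo true
  else
    echo false
  fi
}

# Usage on the NameNode host:
#   acls_enabled /etc/hadoop/conf/hdfs-site.xml
```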

Configuring HDFS ACLs

By default, files in HDFS have no ACLs configured. To view the current ACL of a file:

    $ hdfs dfs -ls /user/test
    Found 1 items
    -rw-r--r-- 3 hdfs hdfs 21 2018-07-21 11:22 /user/test/test_file

    $ hdfs dfs -getfacl /user/test/test_file
    # file: /user/test/test_file
    # owner: hdfs
    # group: hdfs
    user::rw-
    group::r--
    other::r--
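A file without an ACL shows only the three base entries: user::, group:: and other::. To check this from a script, the filter below is a minimal sketch, and the name `extended_entries` is hypothetical. It reads `hdfs dfs -getfacl` output on standard input and prints only named user and group entries. Empty output means the file has no named entries.

```shell
# extended_entries (hypothetical helper): read `hdfs dfs -getfacl` output on stdin and
# print only named entries such as user:user01:r-x or group:group01:rwx (including
# default: entries on directories). Comment lines, the base user::, group:: and other::
# entries, and mask:: are skipped. Empty output means no named entries.
extended_entries() {
  grep -E '^(default:)?(user|group):[^:]+:' || true
}

# Usage:
#   hdfs dfs -getfacl /user/test/test_file | extended_entries
```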

HDFS ACLs follow the same model as POSIX ACLs on UNIX/Linux. For more background on ACLs, refer to the post below:

How to Configure ACL(Access Control Lists) in Linux FileSystem

Let’s configure the ACL on the file by giving user01 r-x permissions and group01 rwx permissions.

    $ hdfs dfs -setfacl -m user:user01:r-x /user/test/test_file
    $ hdfs dfs -setfacl -m group:group01:rwx /user/test/test_file
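The acl_spec passed to -m is a comma-separated list, so the two entries above can also be applied in one call: `hdfs dfs -setfacl -m user:user01:r-x,group:group01:rwx /user/test/test_file`. When you build such a list in a script, a small validating helper catches typos before the command runs. The function below is a sketch under that assumption, and `build_acl_spec` is a hypothetical name. It only checks the general shape type:name:perms with a three-character permission string such as r-x.

```shell
# build_acl_spec (hypothetical helper): check that each argument looks like
# [default:]type:name:perms and join them with commas into one acl_spec for -setfacl -m.
build_acl_spec() {
  local spec="" entry
  for entry in "$@"; do
    # type is user, group, mask or other; name may be empty (e.g. mask::rwx);
    # perms is a three-character string such as r-x or rwx.
    if ! printf '%s\n' "$entry" | grep -Eq '^(default:)?(user|group|mask|other):[^:,]*:[r-][w-][x-]$'; then
      echo "invalid ACL entry: $entry" >&2
      return 1
    fi
    spec="${spec:+$spec,}$entry"
  done
  printf '%s\n' "$spec"
}

# Usage:
#   hdfs dfs -setfacl -m "$(build_acl_spec user:user01:r-x group:group01:rwx)" /user/test/test_file
```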

If you check the ACLs of the file again:

    $ hdfs dfs -getfacl /user/test/test_file
    # file: /user/test/test_file
    # owner: hdfs
    # group: hdfs
    user::rw-
    user:user01:r-x
    group::r--
    group:group01:rwx
    mask::rwx
    other::r--
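The output now also contains a mask::rwx entry that was not set explicitly. When -m is used without a mask entry, HDFS recalculates the mask as the union of the permissions in the group class. That class covers the named users, the named groups and the owning group: here r-x, rwx and r--, giving rwx. The mask is an upper bound on what those entries actually grant, while user:: and other:: are not affected by it. The bash function below sketches that rule by ANDing an entry with the mask position by position. `effective_perm` is a hypothetical helper.

```shell
# effective_perm (hypothetical helper, bash): AND an ACL entry's permissions with the
# mask, position by position. Only meaningful for named users, named groups and the
# owning group (group::); user:: and other:: are not limited by the mask.
effective_perm() {
  local entry="$1" mask="$2" result="" i e m
  for i in 0 1 2; do
    e="${entry:$i:1}"
    m="${mask:$i:1}"
    # A permission survives only if both the entry and the mask grant it.
    if [ "$e" != "-" ] && [ "$m" != "-" ]; then
      result+="$e"
    else
      result+="-"
    fi
  done
  printf '%s\n' "$result"
}

# Examples with the ACL above (mask::rwx):
#   effective_perm r-x rwx   # user01  -> r-x
#   effective_perm rwx rwx   # group01 -> rwx
```

For instance, if the mask were later narrowed with `hdfs dfs -setfacl -m mask::r-- /user/test/test_file`, group01 would keep its rwx entry but effectively get only r--.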

If you look closely at the listing, you can also see a “+” sign after the permission bits, which confirms that the file now has an ACL.

    $ hdfs dfs -ls /user/test/test_file
    -rw-rwxr--+ 3 hdfs hdfs 21 2018-07-21 11:22 /user/test/test_file
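The group bits in this listing read rwx rather than the r-- of group::. Once a file has an ACL, the group bits display the mask instead. Because the “+” marker is part of the permission string, you can also pick out files that carry an ACL from a directory listing. The awk filter below is a minimal sketch, and `paths_with_acl` is a hypothetical name. It takes the path from the last field, so it assumes paths contain no spaces.

```shell
# paths_with_acl (hypothetical helper): read `hdfs dfs -ls` output on stdin and print
# the path of every entry whose permission string ends with "+", i.e. has an ACL.
# The path is taken from the last field, so paths containing spaces are not handled.
paths_with_acl() {
  awk '$1 ~ /^[-d].*[+]$/ { print $NF }'
}

# Usage:
#   hdfs dfs -ls /user/test | paths_with_acl
```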

Removing ACLs from a file

To remove the ACL from a file/directory completely, use the -b option. For example:

    $ hdfs dfs -setfacl -b /user/test/test_file

Check the ACL again to confirm the removal:

    $ hdfs dfs -getfacl /user/test/test_file
    # file: /user/test/test_file
    # owner: hdfs
    # group: hdfs
    user::rw-
    group::r--
    other::r--

You can also check that the “+” sign after the permission bits has disappeared, which likewise indicates that the file no longer has an ACL:

    $ hdfs dfs -ls /user/test/test_file
    -rw-r--r-- 3 hdfs hdfs 21 2018-07-21 11:22 /user/test/test_file
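The -b option drops every extended entry at once. To remove only specific entries and keep the rest, use -x instead. Its acl_spec lists entries without their permissions, for example `hdfs dfs -setfacl -x user:user01 /user/test/test_file` on the file as configured earlier. If your scripts keep entries in the type:name:perms form used with -m, a helper such as the hypothetical `removal_spec` below can derive the -x form from them. It is a sketch that simply strips the last field.

```shell
# removal_spec (hypothetical helper): turn [default:]type:name:perms entries into the
# [default:]type:name form used by -setfacl -x, joined with commas.
removal_spec() {
  local spec="" entry
  for entry in "$@"; do
    # ${entry%:*} drops the last :field, e.g. user:user01:r-x -> user:user01
    spec="${spec:+$spec,}${entry%:*}"
  done
  printf '%s\n' "$spec"
}

# Usage: remove only user01's entry and keep group01's
#   hdfs dfs -setfacl -x "$(removal_spec user:user01:r-x)" /user/test/test_file
```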