Sometimes we have to remove a node from a live cluster: for maintenance, because the node is failing, or simply to reduce the size of the cluster. Decommissioning is the process that supports removing a component from the cluster safely. You must decommission a master or slave component running on a host before removing that component or host from service. Doing so helps prevent data loss and service disruption. Decommissioning is available for the following component types:

- DataNodes

- NodeManagers

- RegionServers

Decommissioning executes the following tasks:

- For DataNodes, safely replicates the HDFS data to other DataNodes in the cluster.

- For NodeManagers, stops accepting new job requests from the masters and stops the component.

- For RegionServers, turns on drain mode and stops the component.

Prerequisites

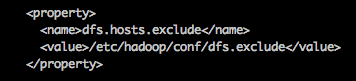

1. Make sure the following property is present in the file /etc/hadoop/conf/hdfs-site.xml:

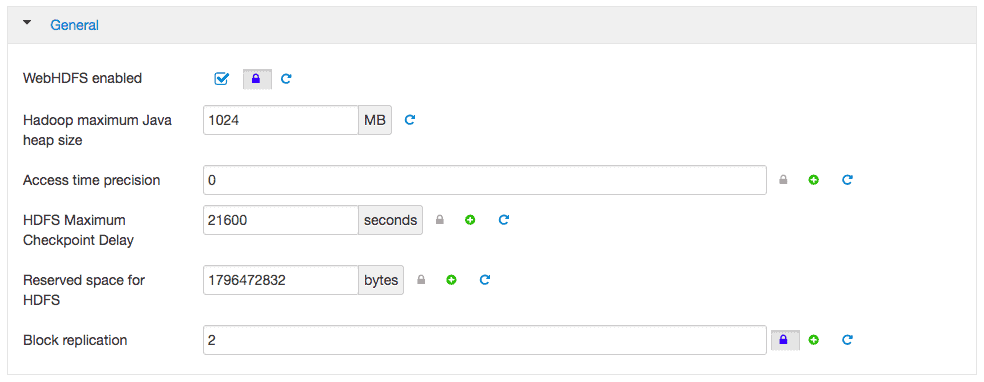

2. The replication factor must be less than the number of datanodes, so that enough datanodes remain to hold every replica once one is removed. For example, removing a datanode from a cluster with only 3 datanodes and a replication factor of 3 will fail. I have changed the replication factor to 2, as I have only 3 datanodes. This change requires a restart of the HDFS service.
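Both prerequisites can be checked from the shell. The sketch below is illustrative only: the helper names `get_hadoop_property` and `can_decommission` are hypothetical, the property name `dfs.hosts.exclude` is an assumption (the screenshot in prerequisite 1 is not reproduced as text, and this is the standard HDFS property for an exclude file), and the awk parsing assumes the usual layout where `<name>` precedes `<value>` inside each `<property>` element.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> of a named property from a
# Hadoop-style XML config file (prints nothing if the property is absent).
# Usage: get_hadoop_property FILE PROPERTY_NAME
get_hadoop_property() {
    awk -v want="$2" '
        /<name>/ {
            line = $0
            sub(/.*<name>[ \t]*/, "", line)
            sub(/[ \t]*<\/name>.*/, "", line)
            found = (line == want)
        }
        found && /<value>/ {
            line = $0
            sub(/.*<value>[ \t]*/, "", line)
            sub(/[ \t]*<\/value>.*/, "", line)
            print line
            exit
        }
    ' "$1"
}

# Hypothetical helper: succeed (exit status 0) only if the DataNodes left
# after the removal can still hold REPLICATION copies of every block.
# Usage: can_decommission LIVE_NODES REPLICATION [NODES_TO_REMOVE]
can_decommission() {
    remaining=$(( $1 - ${3:-1} ))
    [ "$remaining" -ge "$2" ]
}

# Example using the path and numbers from this article:
#   conf=/etc/hadoop/conf/hdfs-site.xml
#   get_hadoop_property "$conf" dfs.hosts.exclude   # assumed property name
#   rep=$(get_hadoop_property "$conf" dfs.replication)
#   can_decommission 3 "$rep" || echo "lower the replication factor first"
```

With 3 datanodes, `can_decommission 3 3` fails and `can_decommission 3 2` succeeds, which matches the scenario described above. On a running cluster, `hdfs getconf -confKey dfs.replication` reports the value that the client configuration resolves to.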

Decommissioning a datanode

1. First log in to the primary namenode (nn1.localdomain) as the user hdfs (or the administrative user of the cluster).

# ssh hdfs@nn1.localdomain

2. The /etc/hadoop/conf/dfs.exclude file contains the DataNode hostnames, one per line, that are to be decommissioned from the cluster. In our case it will be the node dn3.localdomain. We will add this node to the dfs.exclude file.

# vi /etc/hadoop/conf/dfs.exclude

dn3.localdomain

3. On the namenode nn1.localdomain, execute the command below to refresh the nodes:

[hdfs@nn1 ~]$ hdfs dfsadmin -refreshNodes

Refresh nodes successful

4. To verify the decommission status, you can use the “hdfs dfsadmin -report” command.

[hdfs@nn1 ~]$ hdfs dfsadmin -report

Configured Capacity: 25368727552 (23.63 GB)

Present Capacity: 17080078336 (15.91 GB)

DFS Remaining: 16425754624 (15.30 GB)

DFS Used: 654323712 (624.01 MB)

DFS Used%: 3.83%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (3):

Name: 192.168.1.5:50010 (dn3.localdomain)

Hostname: dn3.localdomain

Decommission Status : Decommissioned

Configured Capacity: 12575309824 (11.71 GB)

DFS Used: 218107904 (208.00 MB)

Non DFS Used: 4597932032 (4.28 GB)

DFS Remaining: 7759269888 (7.23 GB)

DFS Used%: 1.73%

DFS Remaining%: 61.70%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 2

Last contact: Sun Jul 15 20:01:52 IST 2018

Last Block Report: Sun Jul 15 18:55:43 IST 2018

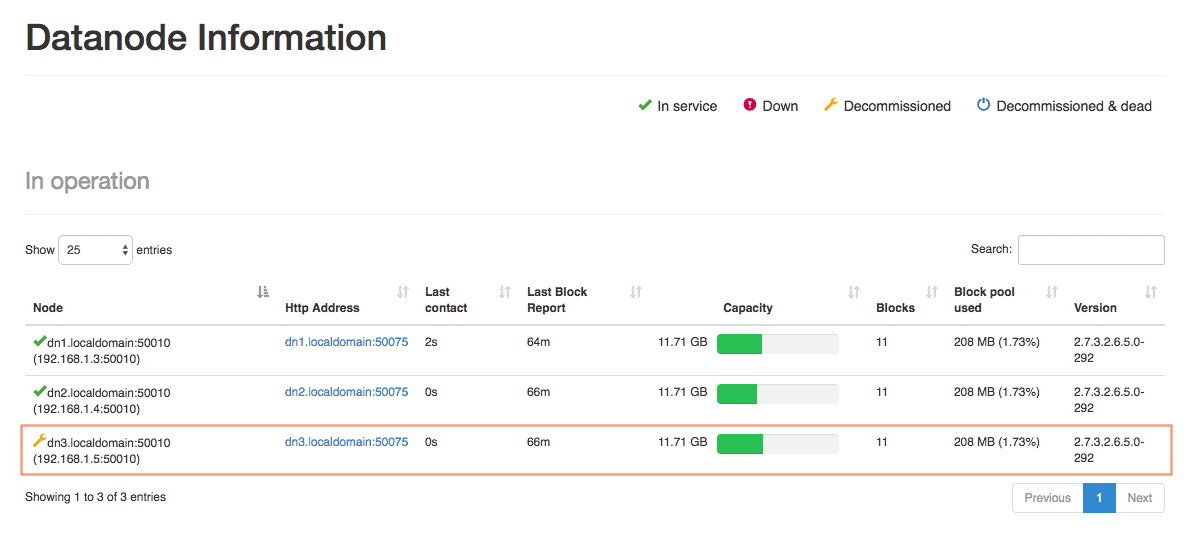
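Steps 2 to 4 can also be scripted. The following is a minimal sketch, not an official tool: the helper names `add_to_exclude` and `decommission_status` are hypothetical, and the parser assumes the report layout shown above, where each datanode entry has a `Hostname:` line followed by a `Decommission Status :` line.

```shell
#!/bin/sh
# Hypothetical helper: append a hostname to the exclude file unless it is
# already listed (one hostname per line).
# Usage: add_to_exclude EXCLUDE_FILE HOSTNAME
add_to_exclude() {
    touch "$1"
    if ! grep -qxF -- "$2" "$1"; then
        printf '%s\n' "$2" >> "$1"
    fi
}

# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and
# print the decommission status of the given host.
# Usage: hdfs dfsadmin -report | decommission_status HOSTNAME
decommission_status() {
    awk -v host="$1" '
        /^Hostname:/ { current = $2 }
        /^Decommission Status/ && current == host {
            sub(/^Decommission Status[ \t]*:[ \t]*/, "")
            print
            exit
        }
    '
}

# Example run on the namenode with the values from this article:
#   add_to_exclude /etc/hadoop/conf/dfs.exclude dn3.localdomain
#   hdfs dfsadmin -refreshNodes
#   hdfs dfsadmin -report | decommission_status dn3.localdomain
```

While blocks are still being copied to other datanodes, the status reads `Decommission in progress`; it changes to `Decommissioned` once re-replication has finished, so the last command can be repeated until that value appears.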

The decommission status can also be verified in the ambari-server web UI, as well as on the DataNodes page of the namenode web UI: http://[NameNode_FQDN]:50070.

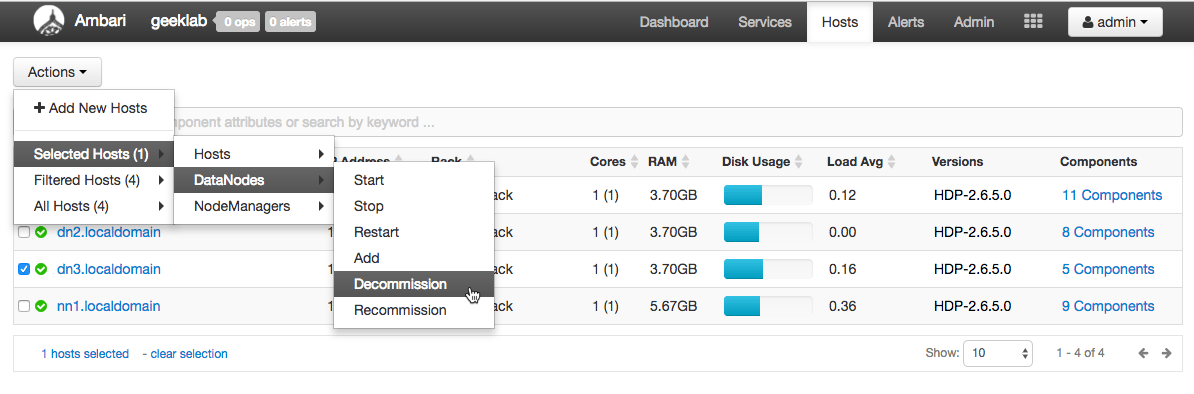

Decommissioning a node from the ambari-server web UI

There is an easy way to decommission a datanode from the ambari-server web UI. Go to the hosts page http://51.15.15.82:8080/#/main/hosts, select the datanode you want to decommission, and perform the decommission as shown below.

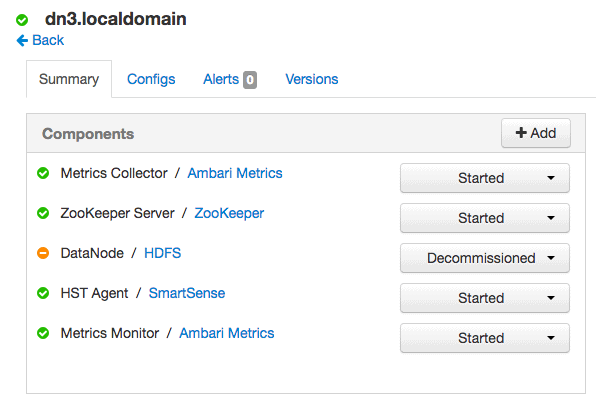

In the ambari-server web UI, under the hosts tab, select the host you have decommissioned to verify the decommission status.

Troubleshooting

As stated earlier, make sure you have enough datanodes to support the configured replication factor. For example, if the replication factor is set to 3, make sure you have at least 4 datanodes in the cluster.

You can check the namenode log file for any errors during decommissioning. For example:

# tail -f hadoop-hdfs-namenode-nn1.localdomain.log
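To cut through unrelated log noise, the log can be filtered for decommission-related lines. This is a minimal sketch that assumes only that the relevant messages contain the word "decommission" in some letter case; the function name `decommission_log_lines` is hypothetical, and the log file name is the one used above (its directory is not given here, so run it from wherever the namenode log lives).

```shell
#!/bin/sh
# Hypothetical helper: print only the lines of a log file that mention
# decommissioning, ignoring letter case.
# Usage: decommission_log_lines LOG_FILE
decommission_log_lines() {
    grep -i 'decommission' "$1"
}

# Example (file name taken from the command above):
#   decommission_log_lines hadoop-hdfs-namenode-nn1.localdomain.log
# To follow the log live instead (--line-buffered as provided by GNU grep):
#   tail -f hadoop-hdfs-namenode-nn1.localdomain.log | grep -i --line-buffered 'decommission'
```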